Tim MalcomVetter

Co-Founder / CEO

Where do Detections come from?

[Note: All graphs are estimates based on our experience and not to scale.]

I suspect most readers intuitively know where Detection Content (the rule logic that defines what bad looks like in a cybersecurity context) comes from. Maybe it’s not something you frequently think about, but you do know it. But do you know how we got here, and why it matters?

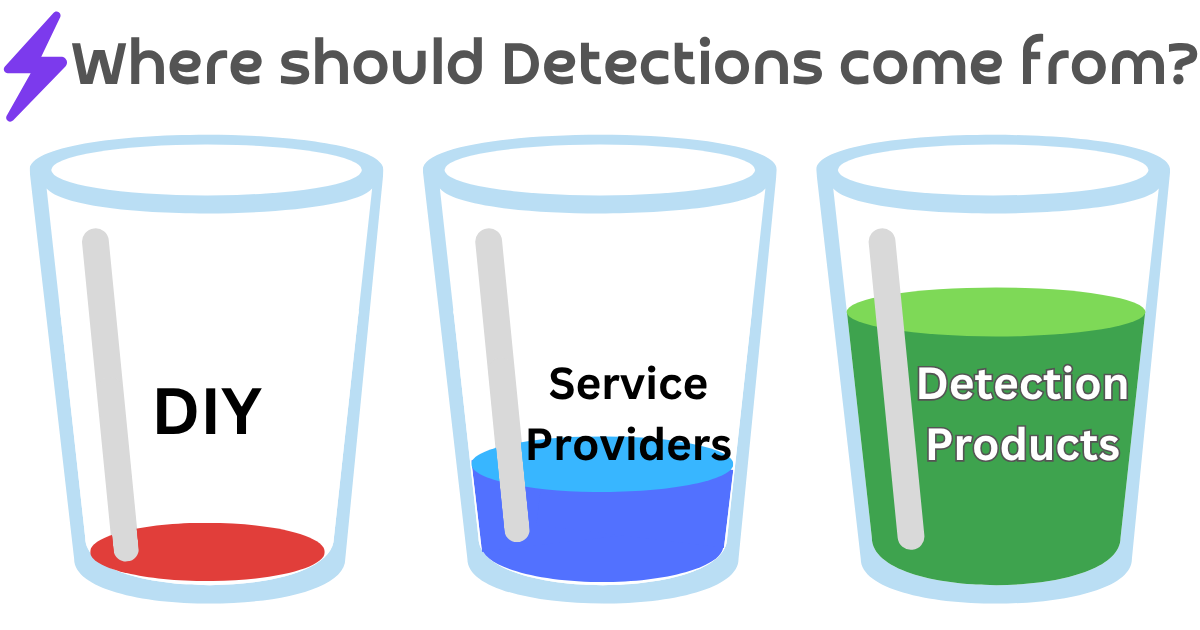

The majority of organizations get their detection content from one of three sources:

- DIY - the security detection engineering talent within their own team

- 3rd Party Service Providers, both one-time professional service engagements and more “continual” managed service providers

- Purpose-built Detection Products

The smaller and more resource-strapped the organization is, the more likely it will source detection content from service providers and products, and very little (if any) from within their own team. Conversely, the larger the organization, the more likely they will want to do their own detection engineering content generation, on top of a mix of products and services. Why that mix happens, we’ll save for another day.

In the late 1990s, “network security” professionals (as they were more likely called then) focused almost exclusively on Prevention of incidents. I frequently recall my own naivety from that time period; I used to think all you had to do was “get the recipe right.” You know, the right mix of firewalls, encryption, strong authentication, and patching, and you were fine. That was stupid thinking! The only people I knew back then who thought about what happens when these prevention controls fail were doing forensics, far “right of boom.” Nobody had floated the idea of Detecting bad quickly yet (at least not to me!).

Back then, if something went wrong the next steps were to log into the affected server (which would have been a physical computer, not a virtual machine), and start picking your way through the file system, looking for logs. If you were good at it, you had some grep statements ready to go, but nothing was proactive. Everything was slow.

Eventually detections made their way into security programs, but the mix of Detection Content sources was quite different from today’s. When my career started, virtually all detection content was DIY; service providers quickly stepped in to fill the gaps, followed more recently by a surge in product-based detections. Something like this graph:

#Circa 2000

In the early 2000s, a Detection Product was finding its way into enterprise environments: Network IDS (intrusion detection system). IDS was very prone to false positives, and enterprises that deployed it very seldom built proper workflows around its alerts. That makes sense: the network is an abstraction layer away from what happens on hosts, and network IDS suffers from all of the problems of signatures (i.e. a slight tweak and the signature won’t fire).

I guess you could call early endpoint anti-virus products a “detection” source, though most customers viewed them as prevention controls. Many produced few or no logs at all. [Side note: I’m old enough to have once written Perl scripts to pull logs from Sophos Anti-Virus so that I could do something about the malware it found but couldn’t clean. Surprisingly, back then it was as simple as killing the malware process with pskill.exe from Sysinternals (now part of Microsoft) and then deleting the .exe file, so my little “app” was able to clean up most of what Sophos left behind.]

ArcSight, the first SIEM (or was it “SIM”? Another topic for another day) company, was founded in 2000. I didn’t get exposure to them until a few years after that. The idea was great: rather than grep a bunch of different log files, we could just query a database. We could also turn those queries into email notifications whenever there was a new hit on the query logic. Just like that, alerts were born.
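To make the “saved query becomes an alert” idea concrete, here is a minimal sketch that assumes an in-memory SQLite table standing in for the SIEM database. The table, columns, query, and function names are all hypothetical, and the code only builds the notification text rather than actually sending email.

```python
import sqlite3

# Hypothetical saved search: failed logins for the "admin" account.
# Table and column names are illustrative, not any real SIEM's schema.
SAVED_QUERY = """
    SELECT id, host, username FROM logs
    WHERE event = 'login_failed' AND username = 'admin' AND id > ?
    ORDER BY id
"""


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory table of sample log events standing in for the SIEM database."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE logs (id INTEGER PRIMARY KEY, host TEXT, event TEXT, username TEXT)"
    )
    conn.executemany(
        "INSERT INTO logs (host, event, username) VALUES (?, ?, ?)",
        [
            ("web01", "login_failed", "admin"),
            ("web01", "login_ok", "alice"),
            ("db01", "login_failed", "admin"),
        ],
    )
    return conn


def new_hits(conn: sqlite3.Connection, last_seen_id: int) -> list[tuple]:
    """Re-run the saved query, returning only rows newer than the last one already alerted on."""
    return conn.execute(SAVED_QUERY, (last_seen_id,)).fetchall()


def format_alert(row: tuple) -> str:
    """Build the text an email notification would carry (actually sending it is out of scope)."""
    event_id, host, username = row
    return f"ALERT #{event_id}: failed login for {username} on {host}"


if __name__ == "__main__":
    conn = build_demo_db()
    last_seen = 0
    for row in new_hits(conn, last_seen):
        print(format_alert(row))
        last_seen = row[0]
    # Polling again with no new log rows yields no new alerts.
    print(new_hits(conn, last_seen))
```

Remembering the highest event ID already alerted on is what turns a one-off query into a notification that fires only on new hits.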

But the problem with SIEM back then wasn’t the tech; it was the people. Johnny Q. Network Administrator was suddenly given a new system to manage on top of all the others: the SIEM. The problem was that Johnny Q. didn’t know anything about what “bad” looked like. Johnny spent most of his time making sure the lights on all of his servers and 1U rackmount network “appliances” (a term seldom heard today, but once upon a time as buzzwordy as “machine learning” is today) were blinking green. Availability was everything!


Johnny Q. Network Administrator was never going to be a Detection Engineer, and the consulting firms quickly figured that out. Professional Services engagements, in which consultants built detection content, deployed it at the customer, and left it behind, became the primary source of Detection Content. For customers who realized a two-week project to deploy some rules wasn’t good enough, Managed Services companies stepped up to fill the gaps, offering to continually deploy detection content, much the way they say they do it today.

In reality, most companies had no detections and no idea they were needed back then. Breaches would last well over a year before they were discovered, if they ever were. So the distribution of Detection Engineering talent looked something like this in 2000:

#The 2010s

Moving into the 2010s, we finally started to get data about breaches. Mandiant published its M-Trends reports annually, and we suddenly had insight into just how bad the breach problem was. Back in 2011, attackers in the incidents Mandiant responded to went a median of 416 days before their presence was detected, far longer than typical dwell times today.

Endpoint vendors McAfee and Symantec ruled the Detection content wars leading into 2010, but faded over the rest of the decade as EDR took over. Even so, McAfee and Symantec were still generally viewed as “prevention” tools and only started using “detection” language as they saw their market slipping to EDR. Adversaries knew their way around flipping bits to bypass AV signatures and keep going. Anyone paying attention knew new AV signatures were piling up at a rate of hundreds to thousands per day. It was untenable to imagine an AV engine growing at that rate without taking over your CPU (as they often did back then, anyway).

Starting in 2011 and 2012, a new category was born: Endpoint Detection & Response (EDR). EDR players Carbon Black, CrowdStrike, and SentinelOne entered the game, which is why in the very first graph at the top of the page there’s an uptick in the green “product” line. That’s also why the blue line (service providers) makes its initial turn downward: EDRs were doing the heavy detection work for them. Within a few years, Managed Service providers started leaning heavily on EDR detections, which relieved them of the burden of building detections themselves. Very large enterprises, who seldom engage Managed Service providers, started doing the same.

The Big 4 firms grew nice portfolios around solving these problems for customers through a mix of detection writing and product deployments. More and more companies began to realize they needed 24x7 monitoring and didn’t have the staff to do it, so Managed Services firms also exploded, both in the number of new companies and in the size of existing ones.

DIY Detection Engineering (building detections in-house with employees) began spreading beyond the super large enterprises into the smaller to midsize enterprises.

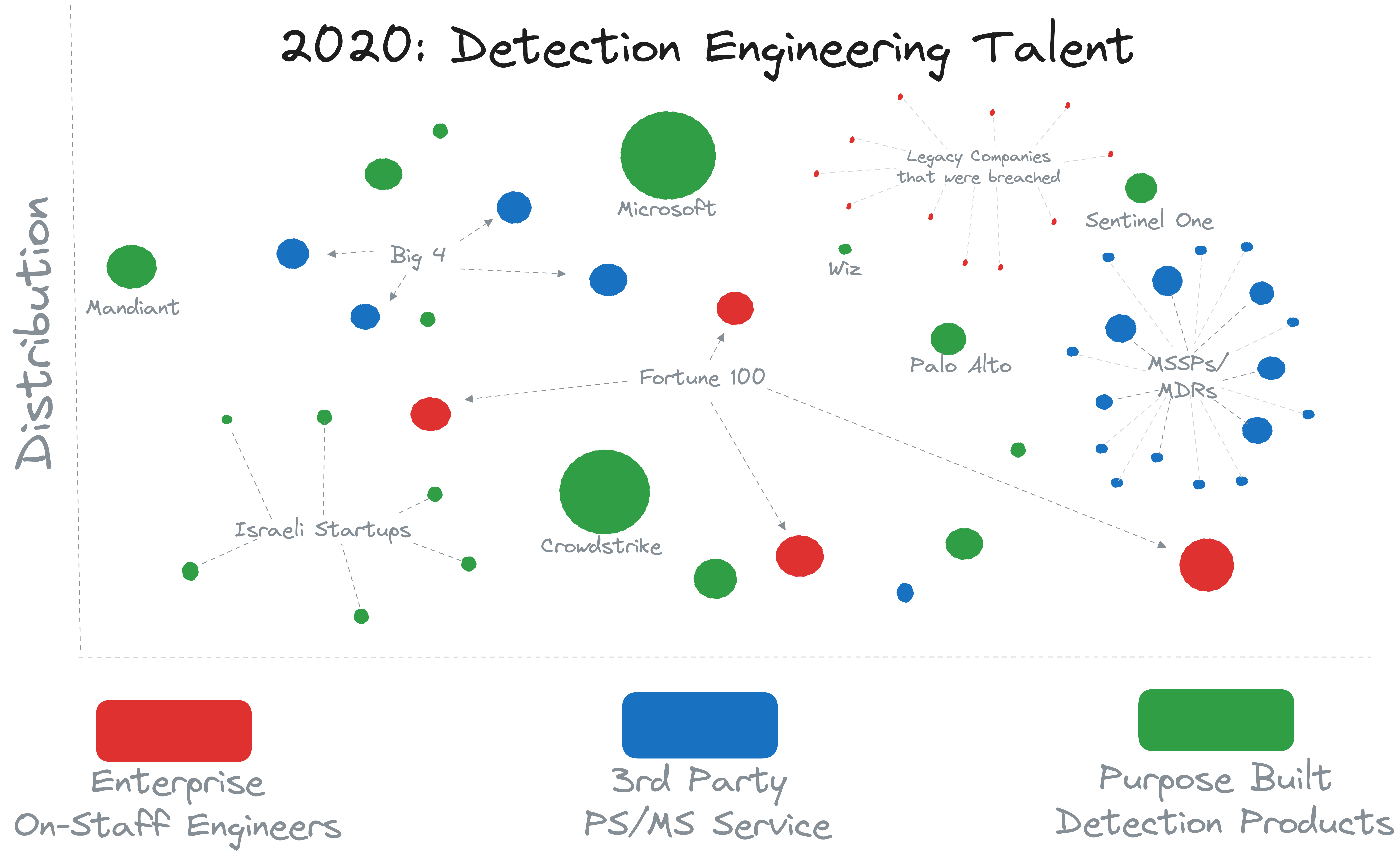

Overall, the Detection Engineering talent distribution looked something like this:

#The 2020s

The 2020s, our current decade, have been all about Detection content coming from purpose-built detection products. In 2020, Wiz was almost nothing compared to its ~$23B value in 2024, but it was here, alongside a myriad of startups coming out of Israel, many of which focus on the Detection & Response lifecycle. There are now many EDR vendors beyond the original three (Carbon Black, CrowdStrike, and SentinelOne), including Microsoft Defender for Endpoint (if you have the proper licensing plan, anyway). The Big 4 are still here, and the Managed Service market has grown considerably as well. It’s also worth noting that other legacy security companies, like Cisco and Palo Alto, have joined this game with their own endpoint products. Palo Alto even has its own Managed Service for detection and response.

An interesting detail (which deserves its own full-length post in the future) is how the DIY detection engineering process has developed. Super large enterprises still do it, maybe out of distrust of vendors, or due to bespoke needs, or possibly just because they have the budget and nobody is telling them not to? But smaller organizations have largely given this up. They may build a few detections or tune existing ones, but their primary source is now external to their organization. It’s simply too complicated to have one or two full-time equivalents (FTEs) working on a problem involving hundreds of adversary groups constantly changing tactics.

Arguably, many MSSPs should not be doing detection engineering either. Some claim they still do it, but in reality they simply ship the same Splunk rules they built in 2015, barely updated. Some fairly large MSSPs say they do detection engineering, but in reality they depend almost entirely on consuming product security alerts. A great way to tell if this is the case: if their “detection rules” look like “Vendor Name - High Severity,” that’s a good indicator they’re just consuming and passing alerts through. Even though they can centralize SOC resources in a single provider shared across dozens to hundreds of customer organizations, they are struggling to invest in building detections.
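The “Vendor Name - High Severity” tell can be checked mechanically against a provider’s rule catalog. The sketch below is a rough heuristic that assumes pass-through rules follow that naming pattern; the regex, function names, and sample rule names are hypothetical, and a real review would look at the rule logic, not just the names.

```python
import re

# Hypothetical pattern for rules named like "Vendor Name - High Severity".
# A rough heuristic for illustration only; names alone can't prove how a rule works.
PASSTHROUGH_PATTERN = re.compile(
    r"^\s*.+?\s+-\s+(critical|high|medium|low)\s+severity\s*$", re.IGNORECASE
)


def looks_like_passthrough(rule_name: str) -> bool:
    """True if a rule name reads like a forwarded vendor alert rather than custom detection logic."""
    return PASSTHROUGH_PATTERN.match(rule_name) is not None


def passthrough_ratio(rule_names: list[str]) -> float:
    """Fraction of rules in a catalog whose names look like vendor pass-throughs."""
    if not rule_names:
        return 0.0
    flagged = sum(looks_like_passthrough(name) for name in rule_names)
    return flagged / len(rule_names)


if __name__ == "__main__":
    catalog = [
        "VendorA - High Severity",
        "VendorB - Critical Severity",
        "VendorC - Medium Severity",
        "Suspicious LSASS access via comsvcs.dll MiniDump",
    ]
    print(f"{passthrough_ratio(catalog):.0%} of rules look like pass-throughs")
```

A high ratio is a prompt to ask harder questions, not proof on its own.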

#Detection Engineering Investment

Speaking of investment, some hard numbers are always fun to take a look at.

CrowdStrike (CRWD), being publicly traded, reports on their R&D (research & development) spend. In 2024, R&D spend will be $778M! A super conservative estimate of 10% of that spend going towards “detection engineering” puts them at about $78M this year alone.

A large, leading [intentionally unnamed since they are not publicly traded] MSSP with several hundred midsize to large enterprise customers and over $300M ARR has approximately 15 FTEs whose primary job function is detection engineering. It’s probably safe to estimate each of them at an average of $200K per year (fully burdened). That brings their total spend on detection engineering to about $3M, more than 20x less than CrowdStrike’s conservative estimate.

Compare that to a SUPER LARGE enterprise I’m personally acquainted with, which has about 5 FTEs doing detection engineering. Assuming the same $200K each, that’s a total of about $1M. And this is a TOP-size enterprise, not an average one; an average enterprise’s spend would likely be much, much lower.

So to summarize:

- CrowdStrike > $78M

- Large MSSP ~ $3M

- Super Large Enterprise ~ $1M
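The back-of-the-envelope math above can be reproduced in a few lines of Python. This sketch uses only the article’s own inputs and assumptions ($778M in R&D, a 10% detection engineering share, $200K per fully burdened FTE); the constant and function names are hypothetical. Integer arithmetic keeps the dollar figures exact.

```python
# Inputs taken from the estimates above; the 10% share and $200K/FTE are assumptions, not reported figures.
CROWDSTRIKE_RD_USD = 778_000_000
DETECTION_SHARE_PCT = 10
COST_PER_FTE_USD = 200_000  # fully burdened, per year


def fte_spend(ftes: int, cost_per_fte: int = COST_PER_FTE_USD) -> int:
    """Annual detection engineering spend for a team of the given size."""
    return ftes * cost_per_fte


def detection_budgets() -> dict[str, int]:
    """Estimated annual detection engineering spend for each organization type."""
    return {
        "CrowdStrike": CROWDSTRIKE_RD_USD * DETECTION_SHARE_PCT // 100,
        "Large MSSP": fte_spend(15),
        "Super Large Enterprise": fte_spend(5),
    }


if __name__ == "__main__":
    budgets = detection_budgets()
    for org, spend in budgets.items():
        print(f"{org}: ${spend / 1_000_000:.1f}M")
    ratio = budgets["CrowdStrike"] / budgets["Large MSSP"]
    print(f"CrowdStrike vs. Large MSSP: {ratio:.1f}x")
```

With these inputs, the CrowdStrike figure works out to roughly 26 times the MSSP’s and about 78 times the enterprise’s.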

Who do you think will deliver better detection content??

This gives us a very interesting picture of where the most resources for building detections are: at product companies, with services companies in a VERY distant second place, and enterprises trailing way behind that. This makes sense to me; it’s just like any other area of specialization, where talent tends to centralize in a handful of organizations. Or, to put it another way: we would be more efficient as a cybersecurity industry if we had all our detection engineering experts at ~100 product companies rather than at least one in each of the largest ~10M enterprises across the globe.


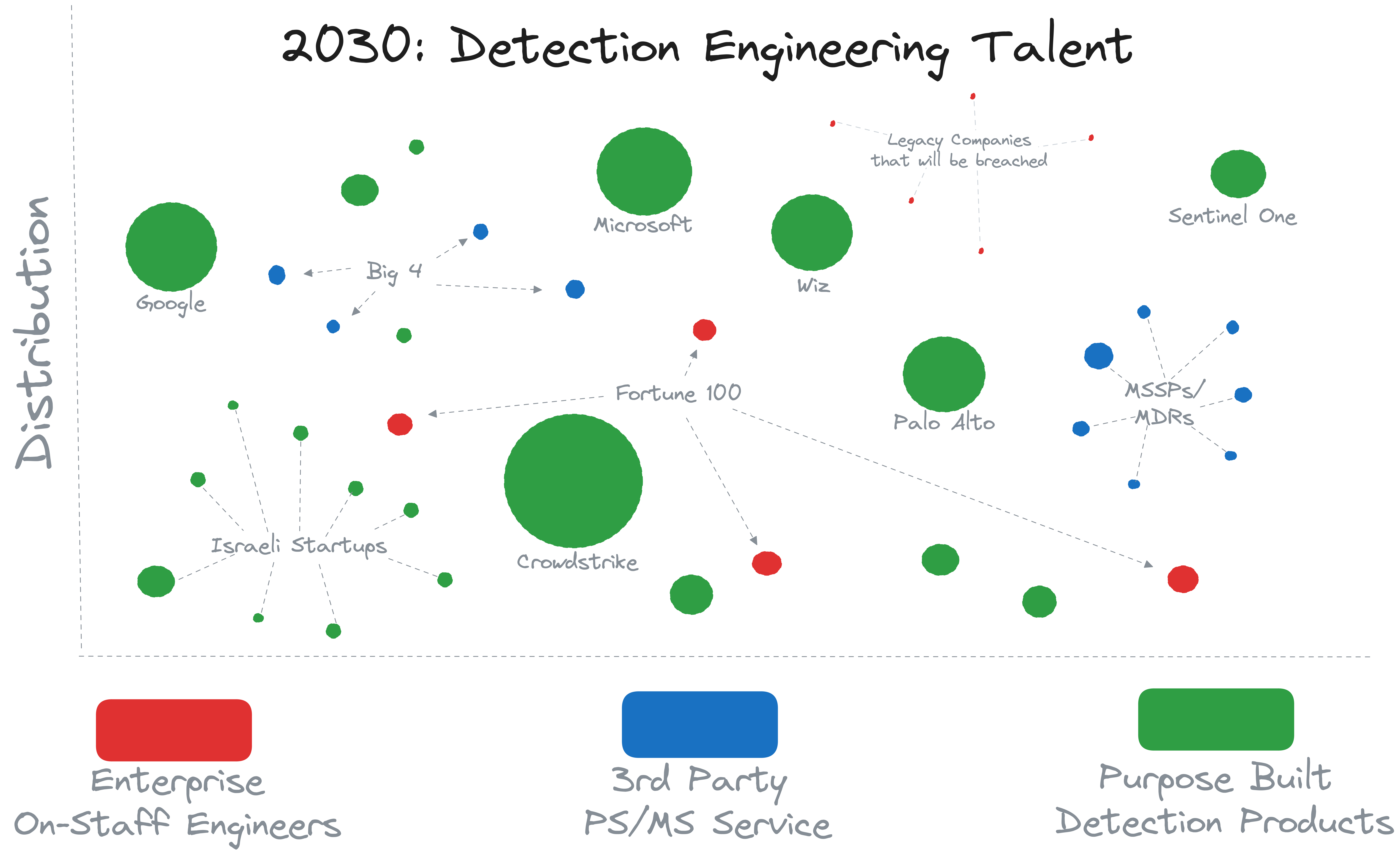

#2030 and Beyond …

What’s a little prediction among friends?

Looking at current trends and guessing at their trajectories, we will likely see the following …

Detection Products will continue to grab more and more market share and the detection engineering talent that goes with it. In 2024, we’re already seeing *DR (here at Wirespeed we refer to these as “Star D. R.” as in wildcard detection & response) products picking up.

The largest enterprises will still do some Detection Engineering in-house. Some smaller organizations will not change; they will keep detection engineering in-house and will be breached because of it. Much like in 2023, when many companies saw their self-managed VPNs become the initial access vector for ransomware, the theme is the same: if you choose DIY, you had better be not only good at it but also diligent about it. The more resource-constrained you are, the less likely you are to be diligent.

On the MSSP and MDR front, we’ll see the market split into two distinct groups:

- MDRs that have their own EDR agent (read that as their own purpose-built detection product), and

- Those that don’t.

The first group will perform roughly the same as detection product companies.

The second group will largely stop investing in detections, for a variety of reasons:

- If their customers cannot afford *DR purpose-built detection products for the majority of their tech stack, they will experience a breach and will likely place the blame on the MDR provider.

- Even if that doesn’t happen, the MDR market largely suffers from a lack of differentiation (i.e. they all look like they’re doing more or less the same things), which means customers will push for better pricing, which in turn will mean human-led MDR offerings will cut corners to save labor and maintain margins.

The MDRs who see the most success in the second group (i.e. without their own EDR agent) will adopt the Wirespeed mindset: be best in the world at all of the steps AFTER the detection happens, and be so affordable that a customer can re-balance their budget to acquire more detection products.

#Wirespeed’s North Star

The history, the current landscape, and the forward trajectory are why we decided to create Wirespeed. We see this shift toward the majority of Detections coming from products rather than services and DIY as inevitable, and we not only want to welcome it in, we want to be the catalyst that brings it here faster. We know there are some organizations that cling to their ability to build their own detections, and if that’s you, you probably hated half of what you read, if you even made it this far. That’s OK by us. We know there are many, many others who are ready for a change in how their budgets are spent.

This also means that, for Wirespeed to succeed, we have to be super-efficient in managing our costs and setting our prices, all while fighting uphill against sales channel dynamics that have learned to enjoy long sales cycles for humongous multi-year MDR deals. We, on the other hand, aim to make trying us out easy, using us even easier, and the decision to adopt the future the easiest of all.

If you’d like to balance out your spend to something more like the picture below, connect with us, and let us show you it can be done!

Want to know more about Wirespeed? Follow us on LinkedIn / X or join our mailing list.